Generative Artificial Intelligence

| Attribute | Details |
|---|---|
| Full Name | Generative Artificial Intelligence (GenAI) |
| Core Characteristics | Data-driven content creation, pattern learning, probabilistic output generation, multimodal flexibility |
| Developmental Origin | Arises from deep learning innovations in neural networks, particularly transformer architectures (e.g., GPT, BERT) |
| Primary Behaviors | Text completion, image generation, style transfer, data synthesis, code generation, deepfake creation |
| Role in Behavior | Enhances productivity, automates creative tasks, generates synthetic data, powers conversational agents |
| Associated Traits | High dimensionality, stochastic outputs, zero-shot generalization, model hallucination risks |
| Contrasts With | Discriminative AI, rule-based systems, classical symbolic AI |
| Associated Disciplines | Machine learning, computer vision, natural language processing, cognitive computing |
| Clinical Relevance | Used in diagnostic imaging synthesis, medical documentation, and digital therapeutics design |
| Sources | Vaswani et al. (2017), Brown et al. (2020), Bommasani et al. (2021) |

Other Names

GenAI, generative machine learning, generative neural networks, synthetic intelligence

Definition

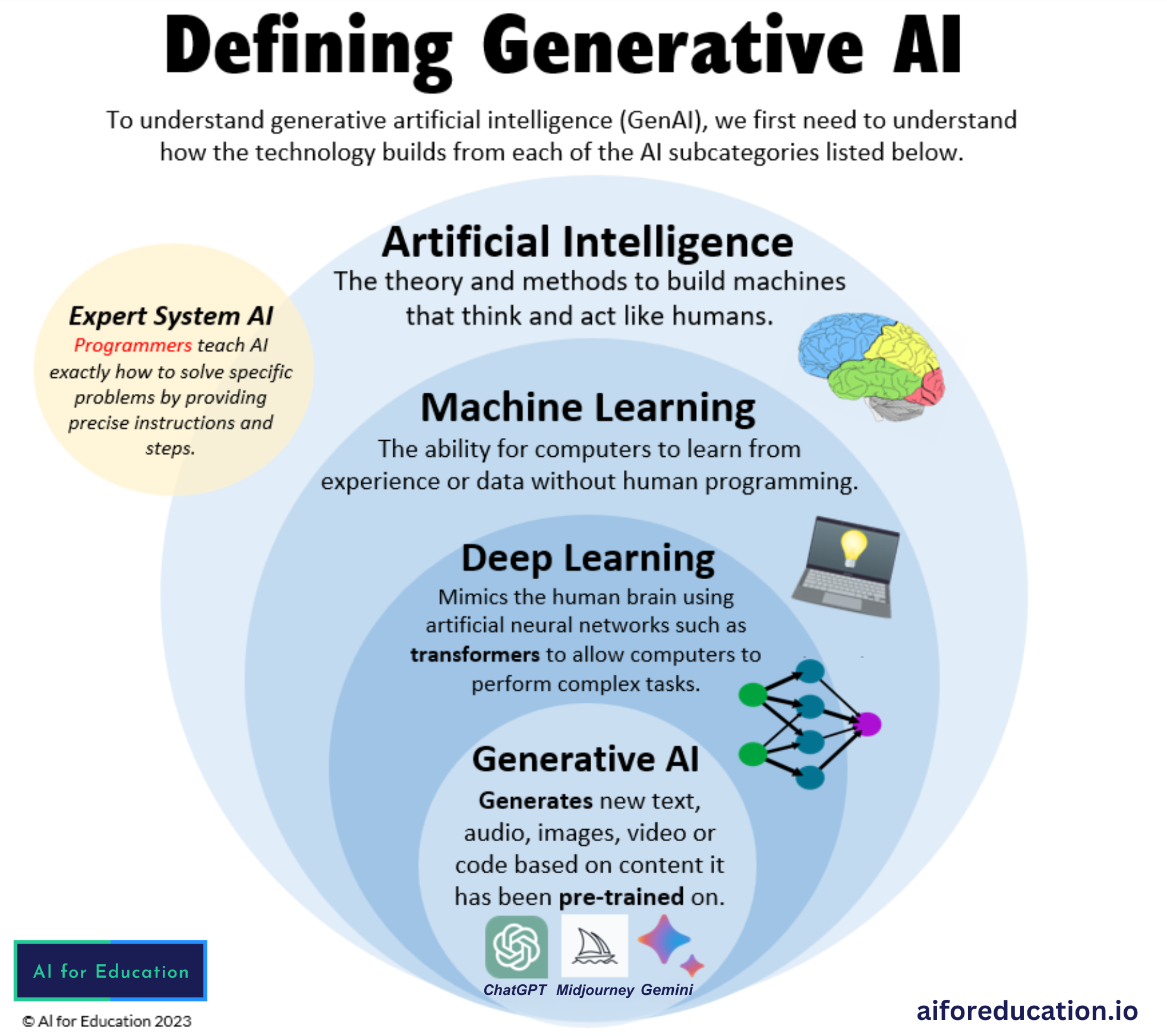

Generative Artificial Intelligence refers to algorithms capable of producing original content by learning patterns in existing data. Rather than classifying input, these systems create new outputs that resemble real-world data but are algorithmically generated.
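
As a rough illustration of "learning patterns in existing data" and then producing new output, the sketch below trains a word-level bigram model on a tiny made-up corpus and samples a new word sequence from it. This is a deliberately simple stand-in, not how modern GenAI systems are built; the corpus and the function names `train_bigrams` and `generate` are assumptions chosen for the example.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for each word, the words observed to follow it."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, rng):
    """Walk the learned transitions to produce a new word sequence."""
    words = [start]
    for _ in range(length - 1):
        options = followers.get(words[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        words.append(rng.choice(options))
    return " ".join(words)

# Hypothetical toy corpus, used only for illustration.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
sentence = generate(model, "the", 6, random.Random(0))
print(sentence)
```

Every adjacent word pair in the output was seen in the corpus, yet the full sequence need not appear there, which is the sense in which the output resembles the data while being algorithmically generated.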

History of Generative Artificial Intelligence

1950s–1980s: Symbolic Roots and Rule-Based Systems

Early AI focused on rule-based symbolic reasoning (e.g., ELIZA), incapable of generating novel data beyond pre-defined scripts. Creativity was considered out of reach.

1990s–2010s: Emergence of Generative Models

Advances in probabilistic models (e.g., Hidden Markov Models, Variational Autoencoders) laid the foundation for content generation, particularly in speech and image domains.

2014: GAN Breakthrough

Goodfellow et al. introduced Generative Adversarial Networks (GANs), enabling high-quality image synthesis. This sparked a surge in generative research across media types.

2017: Transformer Architecture

Vaswani et al. (2017) proposed the transformer, which became the backbone of large-scale generative models like GPT and DALL·E. These models could now generate coherent text, images, and more.

2020–2024: Foundation Models and Public Adoption

OpenAI’s GPT-3, Stability AI’s Stable Diffusion, and Google’s Imagen brought generative artificial intelligence into public tools. These systems moved from lab demos to real-world use in writing, art, coding, and customer service.

2025: Regulation and Human-AI Collaboration

Current focus includes:

- Alignment and hallucination control (OpenAI, Anthropic)

- AI co-authorship in publishing, art, and education

- European AI Act implications for generative systems

Mechanism

- Transformer models: Use attention mechanisms to learn long-range dependencies in data for more coherent output.

- Latent space sampling: Generate outputs by sampling from compressed representations of data distributions.

- Prompt conditioning: Direct outputs via textual, visual, or multimodal inputs that guide generation logic.
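
The mechanisms listed above can be sketched in a few lines of plain Python. The example below is a minimal sketch, not a production implementation: `attention` follows the scaled dot-product form described by Vaswani et al. (2017), and `sample_token` shows one common way that stochastic outputs arise, by sampling from a softmax over scores with a temperature parameter. The function names, the toy vectors, and the temperature value are assumptions made for illustration.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ])
    return outputs

def sample_token(logits, temperature=1.0, rng=random):
    """Sample an index from logits; a lower temperature is closer to argmax."""
    probs = softmax([logit / temperature for logit in logits])
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical toy "token" vectors attending to one another (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)

# Sampling the next token from made-up scores.
next_index = sample_token([0.0, 5.0, 1.0], temperature=0.7, rng=random.Random(0))
```

In a real model the queries, keys, and values are learned projections of the input, and a prompt conditions generation simply by supplying the initial tokens that every later position attends to.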

Psychology

- Anthropomorphism: Users may misattribute consciousness or intent to generative artificial intelligence outputs.

- Creativity augmentation: Tools like ChatGPT or Midjourney reshape how humans ideate, brainstorm, and express creativity.

- Bias amplification: Trained on human data, generative artificial intelligence may inherit and propagate cognitive biases or stereotypes.

- Decision outsourcing: Users may offload ideation, synthesis, or emotional labor onto algorithms.

Neuroscience

- Computational analogy: GenAI mirrors brain-like prediction and compression models (e.g., predictive coding).

- Working memory parallels: A transformer's attention over its context window loosely resembles the short-term dependency tracking attributed to the human prefrontal cortex.

- Imitation learning: GenAI learns by modeling human examples, a process loosely comparable to mirror neuron activity in humans.

- Reward modeling: Reinforcement learning frameworks mimic dopamine-based feedback systems in cognition.

Epidemiology

- As of 2024, over 100 million people interact with generative AI weekly (OpenAI internal estimates).

- Use highest among professionals in tech, education, marketing, and art.

- Global GenAI market estimated to exceed $100B by 2026, with sharp growth in Asia and North America.

Related Constructs to Generative Artificial Intelligence

| Construct | Relationship to Generative AI |
|---|---|
| Discriminative Models | Classify or label data, unlike generative models which synthesize new content. |
| Foundation Models | Large-scale generative systems pretrained on massive corpora for multitask generation. |
| Synthetic Media | Refers to any audio, visual, or text content created by generative algorithms. |
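
The contrast between discriminative and generative models can be made concrete with a toy example. The sketch below fits both kinds of model to the same small, made-up dataset: the generative model estimates a Gaussian per label and can sample new data points, while the discriminative model only learns a threshold for assigning labels. The data, the labels, and all function names are hypothetical and chosen purely for illustration.

```python
import random
import statistics

# Hypothetical toy data: heights in cm for two made-up labels.
DATA = {
    "short": [150.0, 152.0, 155.0, 158.0],
    "tall": [180.0, 183.0, 185.0, 190.0],
}

def fit_generative(data):
    """Model how each label's data is distributed: one (mean, stdev) per label."""
    return {
        label: (statistics.mean(xs), statistics.stdev(xs))
        for label, xs in data.items()
    }

def generate(model, label, rng):
    """Synthesize a new data point for the given label."""
    mean, stdev = model[label]
    return rng.gauss(mean, stdev)

def fit_discriminative(data):
    """Learn only a decision boundary: the midpoint between the two class means."""
    (low_label, low_xs), (high_label, high_xs) = sorted(
        data.items(), key=lambda item: statistics.mean(item[1])
    )
    threshold = (statistics.mean(low_xs) + statistics.mean(high_xs)) / 2
    return lambda x: low_label if x < threshold else high_label

rng = random.Random(0)
generative = fit_generative(DATA)
classify = fit_discriminative(DATA)

new_height = generate(generative, "tall", rng)  # a new, synthetic value
label = classify(172.0)                         # a label for an existing value
```

The discriminative model has no way to produce a new height, whereas the generative model can, because it represents the data distribution itself and not just the boundary between labels.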

In the Media

Generative artificial intelligence dominates media discourse, from artistic disruption to misinformation. Popular portrayals toggle between awe and alarm:

- Film:

  - Her (2013) – Explores AI companionship and the emotional realism of generated responses.

  - The Creator (2023) – Depicts war between humans and sentient AI built from generative roots.

- Television:

  - Black Mirror: Joan is Awful (2023) – Satirizes deepfake media and real-time content generation.

- Books:

  - You Look Like a Thing and I Love You (Shane, 2019) – Explains neural network behavior through humor and absurd generated text.

  - The Alignment Problem (Christian, 2020) – Documents the ethical challenges of aligning generative systems with human values.

Current Research Landscape

Generative artificial intelligence is among the most active domains in computer science. Research focuses on:

- Controlling hallucinations and misinformation in LLMs

- Ethical frameworks for synthetic media and authorship

- Advances in multimodal generation (e.g., text-to-video)

Recent publications include:

- Transforming Military Healthcare Education and Training: AI Integration for Future Readiness. Published: 2025-05-03. Author(s): Justin G Peacock

- AI-Driven De Novo Design and Development of Nontoxic DYRK1A Inhibitors. Published: 2025-05-03. Author(s): Eduardo González García

- Taking the plunge together: A student-led faculty learning seminar series on artificial intelligence. Published: 2025-05-03. Author(s): Faria Munir

- The future of HIV diagnostics: an exemplar in infectious diseases. Published: 2025-05-03. Author(s): Nitika Pant Pai

- AMPCliff: Quantitative definition and benchmarking of activity cliffs in antimicrobial peptides. Published: 2025-05-03. Author(s): Kewei Li

FAQs

How is Generative AI different from regular AI?

Generative artificial intelligence differs from traditional AI in its core functionality and output. Traditional AI, or discriminative AI, analyzes existing data to classify, predict, or make decisions, such as identifying spam emails or recommending products. Generative AI, however, creates new, original content like text, images, or music by learning patterns from training data. It uses deep learning architectures, such as transformers or diffusion models, to generate outputs that resemble human-produced work. While traditional AI operates within predefined rules or datasets, generative AI synthesizes novel data, enabling applications like chatbots, art generation, and synthetic media. This distinction makes generative AI more versatile but also introduces challenges in accuracy, bias, and ethical use.

Is Generative AI creative?

Generative artificial intelligence exhibits a form of computational creativity, but it is fundamentally different from human creativity. Unlike humans, who create based on intent, emotion, and subjective experience, generative AI produces outputs by statistically modeling patterns in its training data. It can combine existing ideas in novel ways, generate realistic text, images, or music, and even mimic artistic styles, leading to outputs that may appear creative. However, it lacks true understanding, intentionality, or originality, as its “creativity” is constrained by its training data and algorithms. While it can assist in creative tasks, its outputs are ultimately derived from learned correlations rather than genuine inspiration or conceptual innovation. Thus, while generative AI can simulate creativity, it does not possess creativity in the human sense.

What are the risks of Generative AI?

Generative artificial intelligence risks include misinformation through convincing deepfakes, amplified biases from training data, and privacy violations via regurgitated sensitive information. It enables scalable malicious uses like phishing and fraud while threatening jobs in creative fields. Overreliance may also reduce human critical thinking, and unclear IP ownership complicates legal frameworks. These dangers demand strict safeguards.

Can Generative AI replace human jobs?

Yes, generative artificial intelligence can replace certain human jobs, particularly those involving repetitive, rules-based tasks in content creation, customer service, and data processing. Roles like copywriting, graphic design, basic coding, and entry-level legal or financial analysis are most vulnerable, as AI can generate text, images, code, and reports with increasing accuracy. However, jobs requiring complex decision-making, emotional intelligence, or high creativity (e.g., strategic leadership, advanced research, or original art) are harder to automate fully.

While AI will displace some jobs, it is more likely to augment others, handling routine tasks while humans focus on oversight, refinement, and innovation. The net impact depends on workforce adaptation, with reskilling and AI-human collaboration becoming critical.